Transcending the memory limitations of a single GPU with PreonLab 6.1

With every PreonLab update, we aim to continuously enhance your simulation experience by increasing efficiency and reducing the memory footprint.

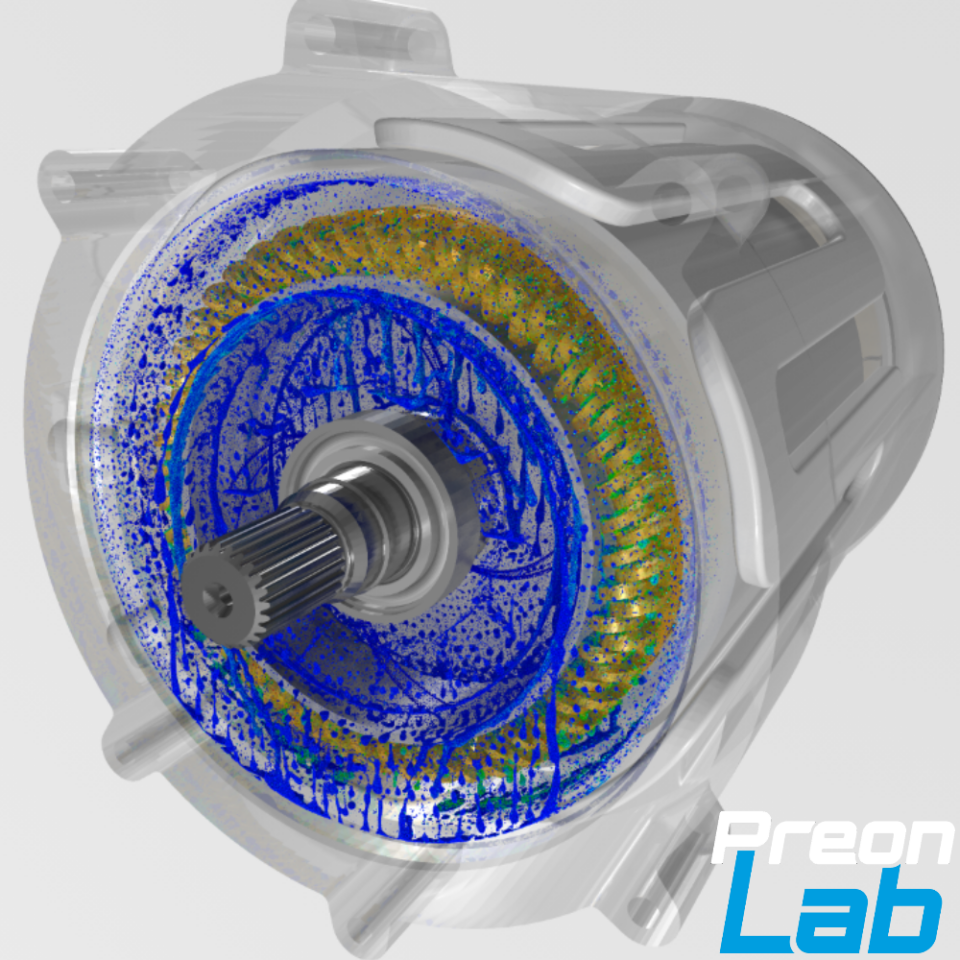

While CPU simulation is still the bread and butter of CFD, GPUs can undeniably provide a significant performance boost and reduce computation time.

Nevertheless, one advantage CPUs generally have over GPUs is a larger memory space. Because a single GPU card has limited memory, simulating very large scenes with many particles can be quite challenging. In addition, importing large tensor fields such as airflows can occupy a lot of precious memory on the graphics card. While PreonLab can cleverly resample such airflows to fit on a single GPU, there will always come a point at which sacrificing accuracy becomes inevitable.

One logical solution is to use state-of-the-art GPU hardware that can accommodate large simulation scenes. Professional GPU cards like NVIDIA’s H100 already offer up to 80 GB of memory. However, does this mean that GPU simulations are only possible by acquiring larger and larger professional GPU cards? And what about scenes that require even more memory than the latest available hardware provides?